For those of you looking for the latest release of the script… it is always available here:

Microsoft recently had a great post on “how they do IT” - and more specifically how they are using Peer Cache in their environment. You can read their post here. There is a lot of good information in that post - seriously, if you haven’t read it, take a minute to look over what they’re doing. The purpose of this post isn’t to rehash their approach. It’s to look at what they’re doing and at other methods for managing which devices are Peer Cache sources.

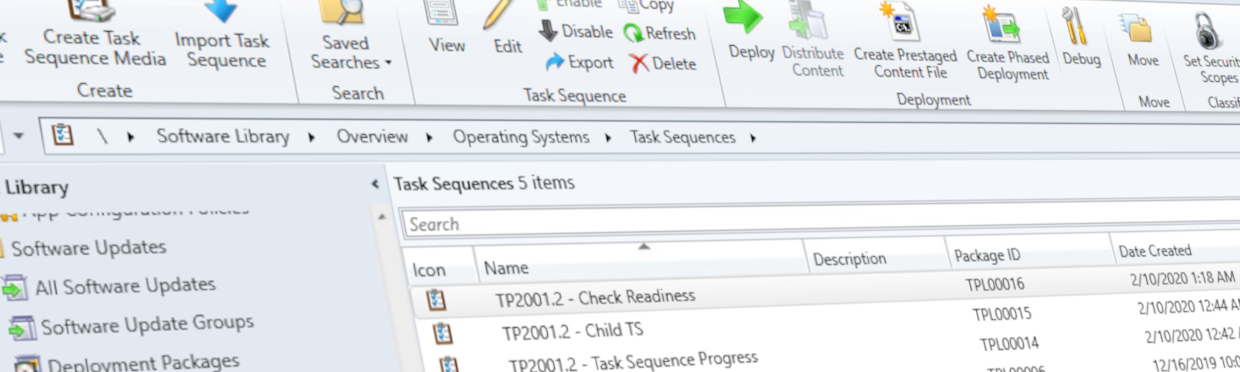

The Microsoft Approach - ConfigMgr Queries

If you didn’t read the article I linked above (shame) then here is the quick and dirty version. Microsoft IT decided that their current goal is to enable as many devices as reasonably possible as Peer Cache sources. They chose to exclude:

- Laptops

- Wireless Connections*

- VMs

- High-Level Executives

* Their query for wireless devices may not be accurate, or was not shared as part of their blog post.

You then create a collection (or collections if you want to separate it by location or boundary) using the filtering query and then apply the appropriate Peer Cache device policy to that collection. Easy peasy. In the end, when combined with BranchCache, they are seeing only 10 - 20% of content requests coming from their distribution points (paraphrasing). Ultimately this is getting them closer to their end goal of removing all distribution points from their environment (awesome).

The Pros

For the most part this is the simplest way to get everything set up. And with the advent of the fast communication channel for clients, the 7.5 minute fallback for offline sources is no longer a concern.

The Caveats

The main caveat to this method applies to offices that don’t have enough devices meeting the minimum qualifications (they specifically call this out in the article as well). For those offices the query has to be modified until the number of devices is on par with what you are expecting. This could be quite time consuming if you have a lot of remote offices with few devices.

Another caveat to this method is that the only way you will really know if an office has “enough” devices is by reviewing by naming convention (if you’re a company that names based on location) or by splitting the Peer Cache source collections into regions/locations so that you can monitor the collection count.

A Different Approach

Before the Microsoft blog post came out I had been researching the recommended practices for Peer Cache for my current client as they have a number of remote locations that are too small to warrant their own distribution point. The overwhelming recommendation from many was to limit the scope of your Peer Cache sources to a few machines per location/boundary group. I suspect the main reason for this was the fallback time (7.5 minutes) before fast channel communication was implemented.

Additionally, devices which switched boundary groups frequently posed a serious risk too. As detailed in my last blog post, before some changes were made to the client in 1806, boundary group changes could take up to 7 days by default unless you were more aggressive with heartbeat discovery.

With that said the recommendation to the client was “pick a few devices per location to be your peer cache sources” - to which their reply was “how do we manage that from a device lifecycle perspective?”

So I looked for a solution online and my google skills came up short. Which in most cases means it doesn’t exist (I’m not perfect, but I’m a hella good googler). So I set about setting goals for a new script:

- The script needs to “pick” the best machines from collections to be Peer Cache sources knowing that the collections will be based on existing Boundary Groups or at least locations with LAN communication.

- The script should check to make sure the device is still valid (name resolvable in DNS, reporting active status in ConfigMgr or responding to ping).

- The script should prioritize certain qualities of a device (SSD/NVMe based storage, WLAN service disabled, remaining free space, etc).

- The script should track the status of a device long term and remove it from the pool if it is no longer valid - or has been unreachable a number of times.

- The script should provide ample logging so that an administrator can monitor the status of each collection for a “minimum” number of Peer Cache sources.
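The prioritization goal in the list above can be pictured as a simple scoring pass. What follows is only a minimal sketch, not the script's actual logic: the property names (`IsSolidState`, `WlanDisabled`, `FreeSpaceGB`), the weights, the `Get-CandidateScore` helper, and the sample devices are all hypothetical.

```powershell
# Hypothetical candidate devices; in practice these values would come from
# hardware inventory rather than being typed in by hand.
$candidates = @(
    [pscustomobject]@{ Name = 'PC-A'; IsSolidState = $true;  WlanDisabled = $true;  FreeSpaceGB = 200 }
    [pscustomobject]@{ Name = 'PC-B'; IsSolidState = $false; WlanDisabled = $true;  FreeSpaceGB = 400 }
    [pscustomobject]@{ Name = 'PC-C'; IsSolidState = $true;  WlanDisabled = $false; FreeSpaceGB = 50 }
)

# Illustrative weights: solid-state storage counts most, then a disabled
# WLAN service, with free space acting as the tie-breaker.
function Get-CandidateScore {
    param([Parameter(Mandatory)] $Device)

    $score = 0
    if ($Device.IsSolidState) { $score += 100 }
    if ($Device.WlanDisabled) { $score += 50 }
    # Cap the free-space contribution so a huge spinning disk cannot outrank an SSD.
    $score += [Math]::Min([int]$Device.FreeSpaceGB, 500) / 10
    return $score
}

$sourcesPerCollection = 2   # how many sources to keep for this location

# Highest score first, then keep only as many as the location needs.
$ranked = $candidates |
    Sort-Object -Property { Get-CandidateScore -Device $_ } -Descending |
    Select-Object -First $sourcesPerCollection

$ranked | ForEach-Object { '{0} (score {1})' -f $_.Name, (Get-CandidateScore -Device $_) }
```

Keeping the scoring in one small function means the weights can be tuned without touching the selection step.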

After a few weeks of development and testing I’m ready to share it with the community.

The Pros

The script maintains a “database” (read: XML file) that tracks the Peer Cache sources and their current status (since the last time the script was run in “delta” mode). This allows us to remove devices that are unreliable over the long term and replace them with a device that may be more reliable. All automatically.
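To make the idea of a status “database” concrete, here is a rough sketch of how a small XML file could carry failure counts from one run to the next. It is an assumption-heavy illustration: the record layout, the threshold value, the device names, and the use of `Export-Clixml`/`Import-Clixml` are my choices for the example and may not match the script's real XML schema.

```powershell
# Hypothetical record layout; the script's real XML schema may differ.
$dataPath    = Join-Path ([System.IO.Path]::GetTempPath()) 'opcs-sketch.xml'
$maxWarnings = 3   # illustrative threshold

# State as it might look after an earlier run (device names are made up).
$records = @(
    [pscustomobject]@{ Name = 'PC-A.contoso.com'; Warnings = 0; Active = $true }
    [pscustomobject]@{ Name = 'PC-B.contoso.com'; Warnings = 2; Active = $true }
)
$records | Export-Clixml -Path $dataPath

# A later "delta" run: reload the state and apply this run's reachability results.
$reachable = @{ 'PC-A.contoso.com' = $true; 'PC-B.contoso.com' = $false }

$records = @(Import-Clixml -Path $dataPath)
foreach ($record in $records) {
    if (-not $reachable[$record.Name]) {
        $record.Warnings++
        if ($record.Warnings -ge $maxWarnings) {
            # Drop the device from the pool so a replacement can be picked.
            $record.Active = $false
        }
    }
}

# Persist the updated state for the next run.
$records | Export-Clixml -Path $dataPath
```

Whether a successful check should reset the counter is a design decision this sketch leaves out.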

It’s highly configurable - minimum hard drive space, minimum memory, excluding laptops, logging level, RBAC ready (setting the limiting collection for the “Peer Cache sources” collection - more about this later), and more in an INI file.

The Cons

Because of the reliance on WMI queries to gather info about the devices it’s recommended that the script be run from the primary site server. You can run it remotely, but there are about 10 WMI queries per device that will be run - so if you had 100 devices that’s 1000 queries that will be run remotely on the first run of the script.

It’s not as easy to set up as a single collection query, but it is pretty much maintenance free once it’s set up.

Using the Script

First things first… the legalese.

THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

The script is pretty innocuous in that it doesn’t make any modifications to the ConfigMgr settings/hierarchy, with the exception of creating a collection and adding/removing devices from that defined collection. So it should be pretty safe to run against your environment.

As always though… test test test.

Requirements

The script has the following requirements:

- PowerShell 3 or later (although testing has been performed exclusively on PS5)

- ConfigMgr Console installed (for the PowerShell cmdlets)

- Administrative privileges to the primary site server for WMI, as well as rights to create and manage collections in your ConfigMgr site.

- It’s best to run it on the primary site server due to the number of WMI calls made per device for scanning (approximately 10). So technically this is (OPTIONAL). HOWEVER, if you choose to run this remotely, make sure that you have the appropriate firewall and remote WMI configuration enabled on the primary site server.

***EDIT***

The BIGGEST requirement to this script IF you want to make use of the SSD/NVMe prioritization is that you need to add Win32_DiskDriveToDiskPartition and Win32_LogicalDiskToPartition to your hardware inventory. These are NOT default classes so you will need to add them - instructions for how to do this are located here. You MUST check the “Recursive” box for these classes to be seen from the Add dialog.

Acquiring The Script

You can get a copy of the script by visiting its GitHub repository located here. While it’s not necessary to download the txt or xml files, it’s probably not a bad idea to do it anyways. Store all of the files in a location where you want to run the script. You’ll be setting this script up as a scheduled task so make sure it’s not in a temporary storage location.

For the purpose of this post, we’re going to store the script at C:\OPCS\

Editing The Configuration

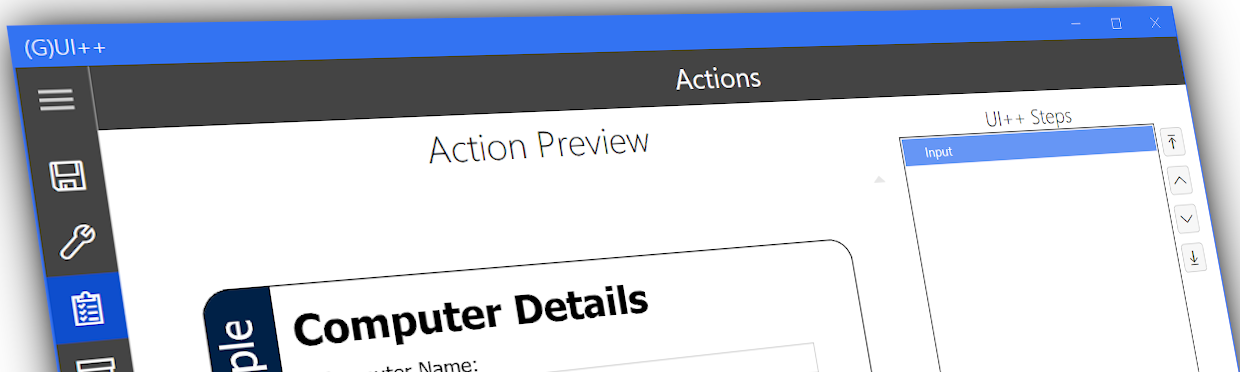

Everything is set with an INI file - or at least a hacky attempt at one (don’t try putting semi-colons after data… it won’t comment it out.) You have the following settings available to you:

- SiteCode: The Site Code for your primary site

- SiteServer: The FQDN of the primary site server

- InitialRun: “true” or “false”. Should be “true” on the first time you run the script or if you want to reset the “database”

- PeerCacheSourcesPerCollection: An integer value for the number of Peer Cache sources you’d like per location/collection.

- DeviceScanType: “ping” or “configmgr” - determines if the script bases the status of the device on a ping test (Test-NetConnection) or a WMI call to the ConfigMgr primary site for the online status of the device. I’d recommend the latter due to the speed benefits you get and the added benefit of knowing the device has a valid ConfigMgr client installed.

- MaxPeerCacheWarnings: The number of times a Peer Cache source can fail the connection test before it is blacklisted. Helps keep unreliable devices from being Peer Cache sources for too long.

- IgnoreBlacklist: “true” or “false”. This will ignore the blacklist and reconsider those devices IF the number of Peer Cache sources falls below the defined threshold (PeerCacheSourcesPerCollection).

- PCCName: The name of the collection you want to create and add Peer Cache sources to.

- PCCLimitingCollectionName: Only necessary if you don’t want to limit the PCCName collection to “All Systems” - might be necessary if you want to manage the collection manually in an environment where collections are limited via RBAC.

- MinMemory: Minimum memory for Peer Cache sources in MB

- MinHardDrive: Minimum hard drive space for Peer Cache sources in GB

- IncludeLaptops: “true” or “false”. Allows Laptops to be Peer Cache sources.

- IncludeVMs: “true” or “false”. Allows VMs to be Peer Cache sources.

- LoggingEnabled: “true” or “false”. Enables or disables logging via file.

- LogFilePath: Defines the path to the log file.

- LogFileAppendDate: “true” or “false”. Enables or disables appending YYYY-MM-DD to the log file name (useful for automated cleanup).

- Debugging: “true” or “false”. Adds more verbosity to the log file.

A brief definition of each of these options is also laid out in the INI file from the GitHub repo. They’re also better organized. ¯\_(ツ)_/¯
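For anyone curious why a trailing semicolon doesn't act as a comment, here is a minimal sketch of a simple key/value reader. It assumes a `Key=Value` layout, which may not be exactly how the real settings file is written, and it is not the script's own parser. The key names come from the list above; the values are made up.

```powershell
# Sample settings text; key names are taken from the list above, values are made up.
$iniText = @'
; a full-line comment is skipped
SiteCode=ABC
PeerCacheSourcesPerCollection=3
DeviceScanType=configmgr ; this trailing text becomes part of the value
IncludeLaptops=false
'@

$settings = @{}
foreach ($line in ($iniText -split '\r?\n')) {
    $trimmed = $line.Trim()
    # Skip blank lines and lines that start with a comment marker.
    if (-not $trimmed -or $trimmed.StartsWith(';')) { continue }

    # Split on the first '=' only, so values may themselves contain '='.
    $key, $value = $trimmed -split '=', 2
    $settings[$key.Trim()] = "$value".Trim()
}

# Everything is read as a string, so convert types explicitly.
$sourceCount    = [int]$settings['PeerCacheSourcesPerCollection']
$includeLaptops = [bool]::Parse($settings['IncludeLaptops'])
```

A reader this simple never looks inside the value, so anything after the data on the same line is stored along with it.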

Formatting the Command Line

The script exposes a few configuration items which I thought were better suited as parameters rather than values in the settings file. Probably a momentary lapse of judgement (actually… probably laziness) for a couple of them, but suffice it to say here are your parameters:

- WhatIf: This should be self-explanatory. Enables What If support for the script.

- SettingsPath: The path to the settings file you want to load. Defaults to __OPCSSettings.ini in the script root folder.

- DataPath: The path to the XML “database” that you want to load or create. Defaults to __OPCSData.xml in the script root folder.

- CollectionFile: The path to the file containing a newline-separated list of collections that you want to scan for eligible Peer Cache sources. This should be a list of collections based on LAN communication or at least boundary group configuration (since Peer Cache sources share content within their Boundary Groups unless otherwise configured for subnet). Defaults to _BoundaryCollectionNames.txt in the script root folder.

- BlacklistFile: The path to the file containing a new line separated list of devices by FQDN that are blacklisted from being Peer Cache sources. Defaults to _BlacklistedDevices.txt in the script root folder.

- ExcludeFile: The path to the file containing a new line separated list of devices by FQDN that are excluded from being Peer Cache sources. This is where you would add devices that you NEVER want to be considered, even if you’re ignoring the blacklist (setting in the INI file). Defaults to _ExcludePeerCacheSource.txt in the script root folder.

Yes I’m obsessed with underscores if you didn’t catch that in the defaults.

A typical command line might look something like this:

Powershell.exe -ExecutionPolicy Bypass -NoProfile -File "C:\OPCS\Optimize-PeerCacheSources.ps1"
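If you want to override the defaults, the parameters described above can be appended to that command line. The sketch below builds the argument list in PowerShell so it is easier to read. It assumes `-WhatIf` behaves as a switch and that the path parameters take plain strings, and the file locations are just the default names placed under C:\OPCS\.

```powershell
# Paths are illustrative; adjust them to wherever the files were stored.
$scriptPath = 'C:\OPCS\Optimize-PeerCacheSources.ps1'

$arguments = @(
    '-ExecutionPolicy', 'Bypass'
    '-NoProfile'
    '-File', $scriptPath
    '-SettingsPath',   'C:\OPCS\__OPCSSettings.ini'
    '-CollectionFile', 'C:\OPCS\_BoundaryCollectionNames.txt'
    '-WhatIf'   # assumed to be a switch; remove it once the dry run looks right
)

# Only launch when the script is actually present at that path.
if (Test-Path -LiteralPath $scriptPath) {
    & powershell.exe @arguments
}
```

Everything after `-File` and the script path is handed to the script itself, so the script's own parameters need to come last.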

Your First Run

First things first - you need to make sure you have a list of collections based on LAN communication or Boundary Group. This might be challenging if you have a lot of weird ranges that don’t fit into subnets (since there is no good way to create collections from IP ranges), but it is a crucial step to ensuring that this works. Add this list of collections separated by line to the collection file - which by default is _BoundaryCollectionNames.txt.
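Since the collection file is just one name per line, it's easy to sanity-check before the first run. Here is a small sketch that writes a sample file to a temporary folder and reads it back. The collection names are made up, and the trimming and de-duplication are my own suggestion rather than something the script is known to do.

```powershell
# Write a sample file to a temp location; the collection names are made up.
$collectionFile = Join-Path ([System.IO.Path]::GetTempPath()) '_BoundaryCollectionNames.txt'
@(
    'BG - Chicago Office'
    ''
    '  BG - Denver Office  '
    'BG - Chicago Office'
) | Set-Content -Path $collectionFile

# Trim whitespace, drop blank lines, and remove duplicate names.
$collectionNames = @(
    Get-Content -Path $collectionFile |
        ForEach-Object { $_.Trim() } |
        Where-Object { $_ } |
        Select-Object -Unique
)

"Found $($collectionNames.Count) collection(s) to scan."
```

On a machine with the ConfigMgr console installed, each name could then be checked against the site with `Get-CMCollection -Name` to catch typos early.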

You should run the script manually the first time. Set the “InitialRun” setting to “true” in the configuration file and make sure the rest of the settings are to your liking. While I’d recommend running the script in “WhatIf” mode first, if you don’t, the change is easily reversible by deleting the created collection and deleting all the content from the Blacklist and Exclude files.

After the script completes (in non-“WhatIf” mode), we can now set the “InitialRun” setting back to “false”. This will set the script to run in “Delta” mode for future executions.

Setting Up The Scheduled Task

You should be pretty familiar with this process if you’re a system administrator. I’m not going to go over the process for creating a new scheduled task - there are great posts on that already. You can find many by searching….

Here are a couple of tips:

- The script needs administrative rights to run if it’s running from the Primary Site directly.

- I’d recommend running the script once a day for most environments. It’s pretty easy on the CPU.

- If you have A LOT of collections to manage, you might consider splitting them into separate sets - each will need its own “database” and list of collections. But then you can “multithread” your process by creating multiple scheduled tasks so that it runs faster.
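If you'd rather script the task than click through the GUI, something along these lines could work on a Windows machine with the ScheduledTasks module. Treat it as a sketch under assumptions: `Register-OpcsTask` is a hypothetical helper name, and the task name, start time, and SYSTEM account are placeholders rather than recommendations.

```powershell
function Register-OpcsTask {
    # Hypothetical helper; the task name, start time, and account are placeholders.
    param(
        [string]$ScriptPath = 'C:\OPCS\Optimize-PeerCacheSources.ps1',
        [string]$TaskName   = 'Optimize Peer Cache Sources',
        [string]$StartTime  = '02:00'
    )

    # Same command line as shown earlier in the post.
    $action = New-ScheduledTaskAction -Execute 'powershell.exe' `
        -Argument "-ExecutionPolicy Bypass -NoProfile -File `"$ScriptPath`""

    # Once a day, per the tip above.
    $trigger = New-ScheduledTaskTrigger -Daily -At $StartTime

    # SYSTEM is only a placeholder: use an account that has the ConfigMgr
    # collection rights listed under Requirements.
    $principal = New-ScheduledTaskPrincipal -UserId 'NT AUTHORITY\SYSTEM' `
        -LogonType ServiceAccount -RunLevel Highest

    Register-ScheduledTask -TaskName $TaskName -Action $action `
        -Trigger $trigger -Principal $principal
}
```

Nothing is registered until the function is called, for example by running `Register-OpcsTask` from an elevated prompt on the site server.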

Closing Thoughts

I will be the first to tell you that this script is definitely not bug free yet. If you use it in an environment and find a bug, please submit an issue to the GitHub repo (or contact me via the link in the menu). I will work diligently to eradicate any bugs you find as I want this to be valuable to the community. Additionally, if you have any feature requests please also submit those to the GitHub repo. I’ve done my best to consider a number of situations where this would be used, but I’m sure that there are many things that the community has to offer as suggestions.

I hope you all have a Merry Christmas (or happy holiday) and as always…

Happy Admining!
